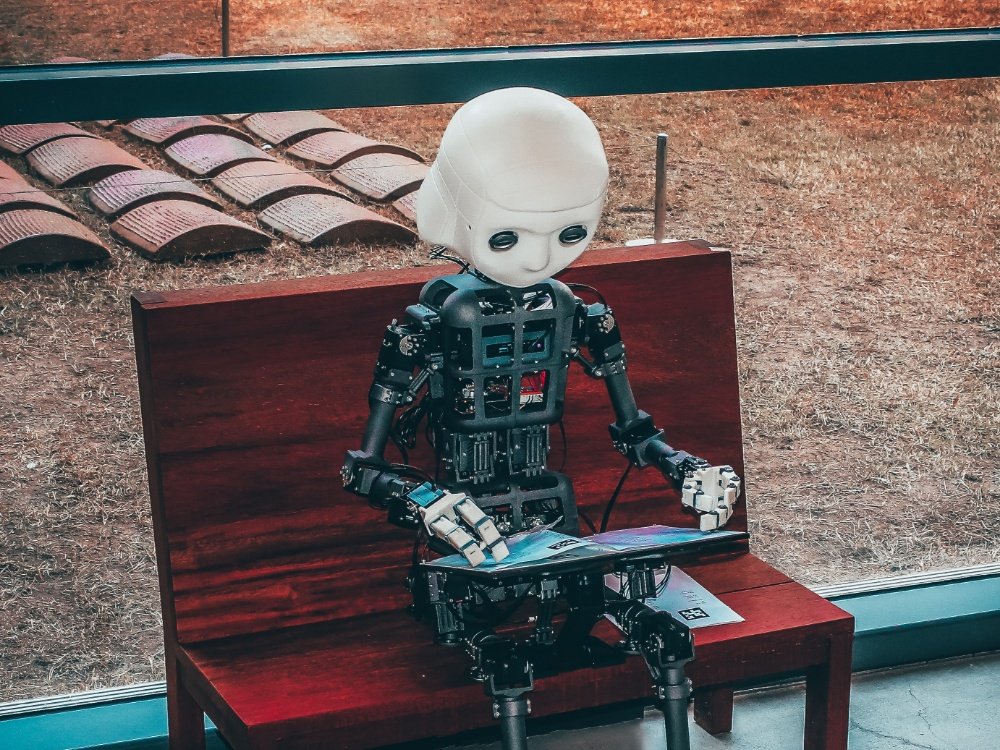

Does an AI model go to therapy? I think it does, because it has so many issues with its deployment. Let’s start with an introductory therapy session.

What is AI Model Deployment?

Deployment is the process of making something available for use, such as a software application, a system, or an update. In AI model deployment, that something is a trained machine learning model. In short, AI model deployment is the process of integrating a trained machine learning (or artificial intelligence) model into a production environment so that it becomes operational.

Training a machine learning model on an available dataset is only part of the work; deploying it is a more complex task. A data scientist trains a model on historical data and validates it to ensure it reaches the required accuracy. The model then has to be integrated into a system where it sees new, unseen data and makes predictions or classifications on it. These outputs generate business insights, support decision-making, or provide recommendations.

What are the Key Components of AI Model Deployment?

1. Preparation of a trained AI Model:

Before reaching the integration stage, one has to clearly define the problem that the resulting AI model should solve. Training, evaluation, and testing of the model follow the problem definition. After this, the model needs to be integrated into the target systems to make it operational, which involves the series of steps described below.
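To make the train, evaluate, and test steps concrete, here is a minimal sketch on a toy problem. Everything in it is an illustrative assumption: the hypothetical historical data, the simple threshold classifier standing in for a real model, the 60/20/20 split, and the 0.9 accuracy target that decides whether the model is ready to move on to integration.

```python
import random


def split(rows, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle and split rows into train / validation / test sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = int(len(rows) * train_frac)
    n_val = int(len(rows) * val_frac)
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]


def train_threshold(rows):
    """Toy model: a threshold halfway between the mean feature of each class."""
    pos = [x for x, y in rows if y == 1]
    neg = [x for x, y in rows if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def predict(threshold, x):
    return 1 if x >= threshold else 0


def accuracy(threshold, rows):
    return sum(predict(threshold, x) == y for x, y in rows) / len(rows)


def prepare_model(rows, required_accuracy=0.9):
    train, val, test = split(rows)
    threshold = train_threshold(train)    # 1. train on historical data
    val_acc = accuracy(threshold, val)    # 2. evaluate on a validation split
    test_acc = accuracy(threshold, test)  # 3. test on data never used above
    ready = val_acc >= required_accuracy and test_acc >= required_accuracy
    return threshold, val_acc, test_acc, ready


if __name__ == "__main__":
    # Hypothetical historical data: the label is 1 when the feature is at least 50.
    history = [(x, int(x >= 50)) for x in range(100)]
    threshold, val_acc, test_acc, ready = prepare_model(history)
    print(f"threshold={threshold:.1f} val={val_acc:.2f} test={test_acc:.2f} ready={ready}")
```

Keeping the test split untouched until the end is what lets the final score say something about performance on new, unseen data.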

2. Model Integration with Deployment Platform:

A deployment platform could be on-premises infrastructure, a cloud service (e.g., Azure, AWS, or Google Cloud Platform), or edge devices. Before deployment, the trained model is usually serialized, that is, saved to a file in a format such as a TensorFlow SavedModel, ONNX, or a PyTorch model checkpoint. Serialization makes saving and loading the model convenient; this step is called model serialization.
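As a minimal sketch of what serialization does, the example below uses Python’s standard-library `pickle` module as a stand-in for the format-specific tools named above (TensorFlow SavedModel, ONNX, PyTorch checkpoints), which need their own libraries. `LinearModel`, `save_model`, and `load_model` are hypothetical names for illustration: the model object is written to disk as bytes and later restored with the same parameters.

```python
import os
import pickle
import tempfile
from dataclasses import dataclass, field


@dataclass
class LinearModel:
    """Hypothetical trained model: prediction = weights . features + bias."""
    weights: list
    bias: float
    metadata: dict = field(default_factory=dict)

    def predict(self, features):
        return sum(w * x for w, x in zip(self.weights, features)) + self.bias


def save_model(model, path):
    # Serialize the in-memory model object to bytes on disk.
    with open(path, "wb") as f:
        pickle.dump(model, f)


def load_model(path):
    # Only load files you trust: unpickling can execute arbitrary code.
    with open(path, "rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    model = LinearModel(weights=[0.5, -1.0], bias=2.0, metadata={"version": "1.0"})
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "linear_model.pkl")
        save_model(model, path)
        restored = load_model(path)
    print(restored == model, restored.predict([2.0, 1.0]))  # True 2.0
```

`pickle` ties the saved file to the Python class definition and is unsafe to load from untrusted sources, which is one reason framework-native or portable formats are often preferred for real deployments.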

Next, API endpoints need to be developed: the serving application behind them loads the serialized model and makes it available for use, for example by the general public. Through these endpoints, a person or a service can interact with the model by making HTTP requests.
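The endpoint idea can be sketched with Python’s standard-library `http.server` and `urllib`. This is a minimal sketch, not a production server: it assumes a hypothetical `/predict` route that accepts JSON such as `{"features": [2.0, 1.0]}`, and a hard-coded linear model standing in for one loaded from a serialized file. `request_prediction` shows the client side, i.e. how a person or another service would call the endpoint over HTTP.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a model deserialized at startup (e.g. from a saved file).
WEIGHTS, BIAS = [0.5, -1.0], 2.0


def predict(features):
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Hypothetical route name; any other path is rejected.
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            result = predict(payload["features"])
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "Expected a JSON body with a 'features' list")
            return
        body = json.dumps({"prediction": result}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch


def start_background_server(host="127.0.0.1", port=8000):
    """Serve on a daemon thread; port=0 lets the OS pick a free port."""
    server = HTTPServer((host, port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def request_prediction(url, features):
    """Client side: POST the features as JSON and return the prediction."""
    data = json.dumps({"features": features}).encode("utf-8")
    req = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["prediction"]


if __name__ == "__main__":
    server = start_background_server(port=0)
    host, port = server.server_address[:2]
    print(request_prediction(f"http://{host}:{port}/predict", [2.0, 1.0]))  # 2.0
    server.shutdown()
    server.server_close()
```

Real deployments typically put a web framework or a dedicated model server in place of `http.server`, but the request and response shape stays the same idea: JSON features in, JSON prediction out.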

3. Scalability:

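If you expect a high volume of requests, design the deployment platform’s scaling mechanisms accordingly, for example by running several instances of the model service and adjusting their number as the load changes.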
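One way to decide how many instances (replicas) to run is a proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current_replicas * current_metric / target_metric)`; the function name, the replica bounds, the 10% tolerance band, and the "requests per second per replica" metric are illustrative assumptions, not settings from any specific deployment.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule.

    current_metric / target_metric: e.g. average requests per second per replica,
    observed vs. desired. Returns the replica count to run next.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical target: 50 requests/second per replica.
    print(desired_replicas(2, current_metric=150, target_metric=50))   # overloaded -> 6
    print(desired_replicas(4, current_metric=20, target_metric=50))    # mostly idle -> 2
    print(desired_replicas(3, current_metric=52, target_metric=50))    # within tolerance -> 3
    print(desired_replicas(2, current_metric=1000, target_metric=50))  # capped -> 10
```

The tolerance band keeps small fluctuations in load from constantly adding and removing replicas, and the upper bound caps cost during traffic spikes.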

4. Real-time or Batch Processing:

How the model receives data, in real time or in batches, shapes how predictions are served and how the model and its parameters get updated. A real-time example: a user requests a prediction, and the backend service returns the prediction produced by the ML service; the backend and ML service then use this output, together with the actual outcome once it is known, to update the model and its parameters. A batch example: the backend and ML service update the model only after a whole batch of data has been received.
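The difference between updating after every observation and updating after a batch can be illustrated with a toy linear model `y = w*x + b` trained by gradient descent on squared error. This is a minimal sketch, assuming a hypothetical noise-free stream of observations from `y = 2x + 1`, plus an illustrative learning rate, batch size, and number of passes; it shows only the update schedule, not how a real ML service would store or roll out the updated parameters.

```python
def online_update(w, b, x, y, lr):
    """Real-time style: one gradient step on squared error for a single observation."""
    error = (w * x + b) - y
    return w - lr * error * x, b - lr * error


def batch_update(w, b, batch, lr):
    """Batch style: average the gradient over a collected batch, then step once."""
    n = len(batch)
    grad_w = sum(((w * x + b) - y) * x for x, y in batch) / n
    grad_b = sum((w * x + b) - y for x, y in batch) / n
    return w - lr * grad_w, b - lr * grad_b


# Hypothetical noise-free stream of observations from y = 2x + 1.
STREAM = [(i / 10, 2 * (i / 10) + 1) for i in range(20)]


def train_online(stream, epochs=500, lr=0.1):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in stream:
            w, b = online_update(w, b, x, y, lr)  # update after every observation
    return w, b


def train_in_batches(stream, batch_size=5, epochs=500, lr=0.1):
    w, b = 0.0, 0.0
    for _ in range(epochs):
        buffer = []
        for x, y in stream:
            buffer.append((x, y))
            if len(buffer) == batch_size:  # update only once a full batch has arrived
                w, b = batch_update(w, b, buffer, lr)
                buffer = []
        # Any incomplete batch left at the end of a pass is simply dropped in this sketch.
    return w, b


if __name__ == "__main__":
    print("online :", train_online(STREAM))
    print("batched:", train_in_batches(STREAM))
```

Both schedules should end close to `w = 2, b = 1`; the online version makes many small updates, while the batched version makes fewer, smoother ones.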

5. Security, Monitoring and Maintenance:

Implement the necessary security and monitoring tools to protect the model and its data, keep track of the model’s performance, and identify potential issues early, so that the model keeps running effectively. The model should also be updated regularly with new data to maintain or improve its performance.
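As one small piece of performance monitoring, a service can compare recent predictions with the true outcomes once they become known and raise a flag when accuracy drops. This is a minimal sketch: the class name, the window size, and the alert threshold are hypothetical, and a real setup would usually export such metrics to a monitoring system rather than keep them in process memory.

```python
from collections import deque
from typing import Optional


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` labelled predictions
    and flag when it falls below `alert_threshold` (both hypothetical settings)."""

    def __init__(self, window=100, alert_threshold=0.8):
        self.outcomes = deque(maxlen=window)  # oldest outcome is dropped automatically
        self.alert_threshold = alert_threshold

    def record(self, prediction, actual):
        # Call once the true outcome for an earlier prediction is known.
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        acc = self.accuracy()
        return acc is not None and acc < self.alert_threshold


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(window=4, alert_threshold=0.75)
    for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 1)]:
        monitor.record(predicted, actual)
    print(monitor.accuracy(), monitor.needs_attention())  # 1.0 False
    for predicted, actual in [(1, 0), (0, 1), (1, 0)]:  # the model starts to drift
        monitor.record(predicted, actual)
    print(monitor.accuracy(), monitor.needs_attention())  # 0.25 True
```

An alert like this is a natural trigger for the regular retraining on new data mentioned above.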

6. Version Control using CI/CD:

Maintain version control and use Continuous Integration/Continuous Deployment (CI/CD) pipelines to track changes and roll back to previous versions when needed. CI/CD also ensures that changes reach production reliably.
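The pipeline itself is normally defined in whatever CI/CD tool a team uses, so it is not reproduced here. As a minimal sketch of the idea it supports, the hypothetical `ModelRegistry` below keeps every deployed version, promotes a new model only if automated checks pass (the role a CI stage plays), and can roll production back to the previous version. The class, its methods, and the 0.9 accuracy check are illustrative assumptions, not a particular tool’s API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Tuple


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry that keeps every deployed model version,
    so production can be rolled back to an earlier one."""
    versions: List[Tuple[str, Any]] = field(default_factory=list)  # in deployment order
    active_index: int = -1  # -1 means nothing has been deployed yet

    def deploy(self, version_tag: str, artifact: Any) -> None:
        self.versions.append((version_tag, artifact))
        self.active_index = len(self.versions) - 1

    def deploy_if_checks_pass(self, version_tag: str, artifact: Any,
                              checks: List[Callable[[Any], bool]]) -> bool:
        # CI-style gate: promote the artifact only if every automated check passes.
        if all(check(artifact) for check in checks):
            self.deploy(version_tag, artifact)
            return True
        return False

    def active(self) -> Tuple[str, Any]:
        if self.active_index < 0:
            raise LookupError("no model has been deployed")
        return self.versions[self.active_index]

    def rollback(self) -> str:
        # Point production back at the previously deployed version.
        if self.active_index <= 0:
            raise LookupError("no earlier version to roll back to")
        self.active_index -= 1
        return self.versions[self.active_index][0]


def accuracy_check(artifact) -> bool:
    return artifact["val_accuracy"] >= 0.9  # hypothetical release threshold


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.deploy_if_checks_pass("v1", {"val_accuracy": 0.92}, [accuracy_check])
    registry.deploy_if_checks_pass("v2", {"val_accuracy": 0.85}, [accuracy_check])  # rejected
    registry.deploy_if_checks_pass("v3", {"val_accuracy": 0.94}, [accuracy_check])
    print(registry.active()[0])  # v3
    print(registry.rollback())   # v1, because v2 never reached production
```

Because rejected versions never enter the deployment history, a rollback always lands on a version that once passed the checks.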

Conclusion:

Deploying an AI model is a critical phase in the machine learning lifecycle, because it is the step that takes the model from development and training to actual use in solving real-world problems. The key components described above can vary depending on the deployment environment, the nature of the AI model, and its practical use.